External Blog Posts

Here are some of the blog posts I have written for external publications.

On the Last9 blog:

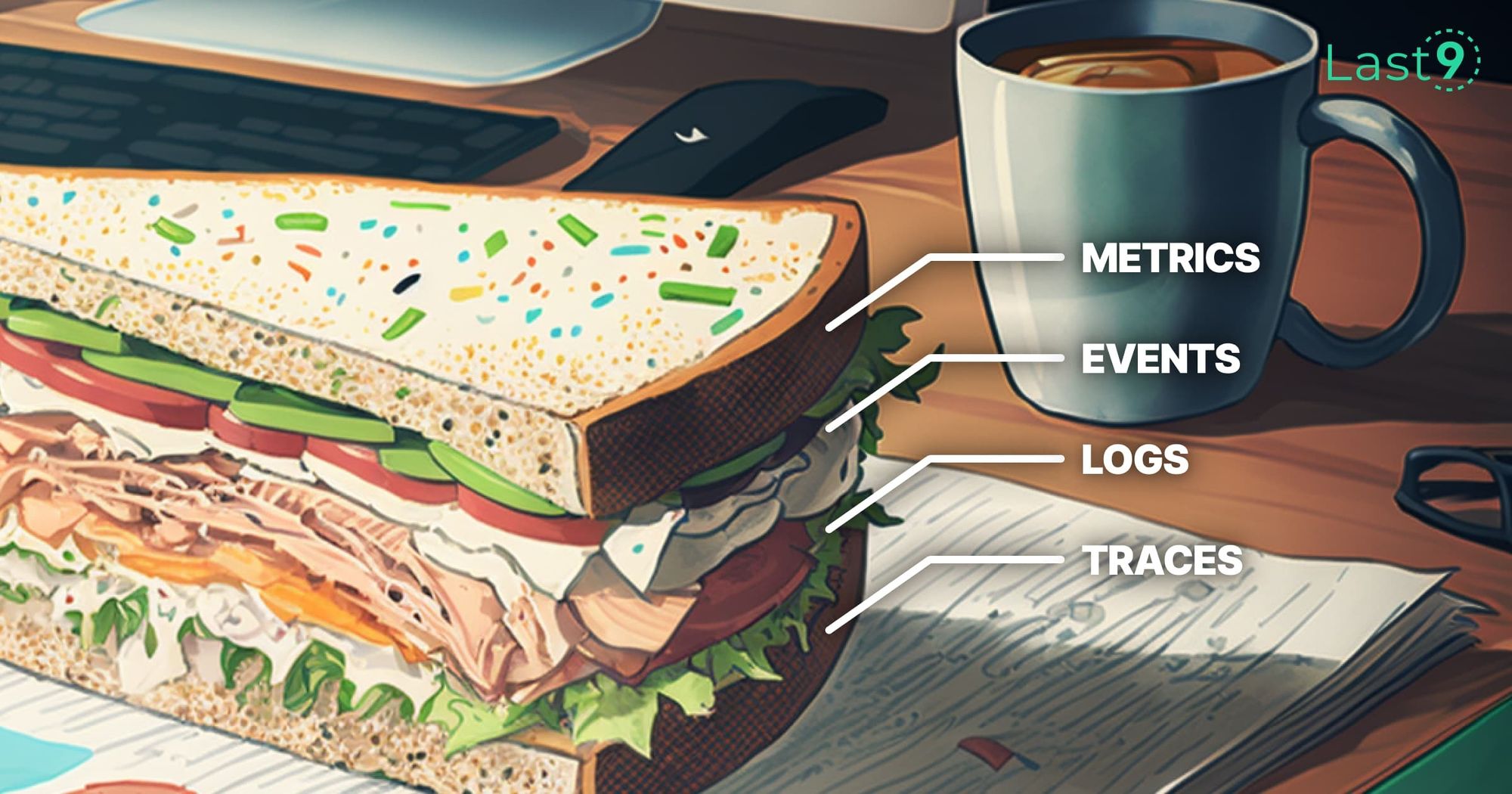

Understanding Metrics, Events, Logs and Traces - Key Pillars of Observability

Understanding Metrics, Logs, Events and Traces - the key pillars of observability and their pros and cons for SRE and DevOps teams.

Filtering Metrics by Labels in OpenTelemetry Collector

How to filter metrics by labels using the OpenTelemetry Collector

A practical guide for implementing SLO

How to set Service Level Objectives: a 3-step guide

How to Improve On-Call Experience!

Better practices and tools for managing on-call

Monorepos - The Good, Bad, and Ugly

A monorepo is a single version control repository that holds all the code, configuration files, and components required for your project (including services like search) and it’s how most projects start. However, as a project grows, there is debate as to whether the project’s code should be split in…

Prometheus vs InfluxDB | Last9

What are the differences between Prometheus and InfluxDB - use cases, challenges, advantages, and how you should go about choosing the right TSDB

What is Prometheus Remote Write | Last9

Learn what Prometheus Remote Write is and how to configure it.

OpenTelemetry vs. Prometheus | Last9

OpenTelemetry vs. Prometheus - Differences in architecture and metrics

SRE vs Platform Engineering | Last9

What’s the difference between SREs and Platform Engineers? How do they differ in their daily tasks?

Downsampling & Aggregating Metrics in Prometheus: Practical Strategies to Manage Cardinality and Query Performance | Last9

A comprehensive guide to downsampling metrics data in Prometheus, with robust alternative solutions

The difference between DevOps, SRE, and Platform Engineering | Last9

In reliability engineering, three concepts keep getting talked about - DevOps, SRE and Platform Engineering. How do they differ?

Mastering Prometheus Relabeling: A Comprehensive Guide | Last9

A comprehensive guide to relabeling strategies in Prometheus

OpenTelemetry vs. OpenTracing | Last9

OpenTelemetry vs. OpenTracing - differences, evolution, and ways to migrate to OpenTelemetry

Prometheus vs Thanos | Last9

Everything you want to know about Prometheus and Thanos, their differences, and how they can work together.

What is OpenTelemetry Collector | Last9

What the OpenTelemetry Collector is: its architecture, deployment, and how to get started

Prometheus Alternatives | Last9

What are the alternatives to Prometheus? A guide to comparing different Prometheus alternatives.

Prometheus Operator Guide | Last9

What the Prometheus Operator is and how it can be used to deploy the Prometheus stack in a Kubernetes environment

How to Manage High Cardinality Metrics in Prometheus | Last9

A comprehensive guide to understanding high-cardinality Prometheus metrics, with proven ways to find and manage them.

Best Practices Using and Writing Prometheus Exporters | Last9

This article will go over what Prometheus exporters are, how to properly find and utilize prebuilt exporters, and tips, examples, and considerations when building your own exporters.

Prometheus Federation ⏤ Scaling Prometheus Guide | Last9

We discuss the nuances of federation in Prometheus and address Prometheus scaling challenges, along with alternatives to Prometheus federation.

Prometheus Metrics Types - A Deep Dive | Last9

A deep dive on different metric types in Prometheus and best practices

How to Instrument Java Applications using OpenTelemetry - Tutorial & Best Practices | Last9

A comprehensive guide to instrumenting Java applications using OpenTelemetry libraries

How To Instrument Golang app using OpenTelemetry - Tutorial & Best Practices | Last9

A comprehensive guide to instrumenting Golang applications using OpenTelemetry libraries for metrics and traces

Prometheus and Grafana | Last9

What Prometheus and Grafana are, what they are used for, and how they differ.

SRECon EMEA 2024 - Day 3 | Last9

Here’s a snapshot of the key talks, important ideas, and memorable moments that set the stage for SRECon EMEA Dublin 2024!

We hope you’ve been keeping up with our Day 1 and Day 2 updates! If you happened to miss them, you can catch the highlights in SRECon EMEA 2024 - Day 1 and SRECon EMEA 2024 - Day 2.

Highlights from Day 3

Here are a few sessions that sparked great conversations and that I personally enjoyed:

Riot Games: Evolution of Observability at the Gaming Company

Erick and Kirill from Riot Games walked us through how they’re improving observability to keep up with the fast-growing gaming world. With gaming expected to double in the next decade, Riot is focused on keeping gameplay stable and smooth, especially for competitive online players. It was refreshing to hear their take and learn more about observability in gaming.

A Powerful Logs Management Solution We All Have and Use but We Underestimate: systemd-journal

Costa from Netdata talked about the exciting but often overlooked features of systemd-journal. He shared relatable examples showing how to turn basic logs into structured entries, making it clear why this tool can be so useful for the SRE community.

Blast Radius Reduction for Large-Scale Distributed Systems

I found this one intriguing! Linhua from the Huawei Ireland Research Centre discussed the challenges of building large-scale distributed systems. He emphasized the 'design for failure' approach and shared strategies to reduce the impact of failures. Linhua also stressed how crucial it is to verify designs for reliability, and how smart planning helps maintain stability in complex systems.

Building New SRE Teams

Avleen and Stephane hosted a relaxed session on building new SRE teams. It was a great chance to connect with other SREs and hear about their experiences, something I also love doing with my SREStories!

Even if you couldn’t be there in person, keep an eye out for the decks and recordings; they’re going to be well worth a watch.

Prathamesh at the Last9 booth

And that’s a wrap for SRECon EMEA 2024! It was an incredible few days of learning and connecting. A huge thanks to the organizers, speakers, and sponsors for making it such a memorable event!

SRECon EMEA 2024 - Day 2 | Last9

Here’s a quick recap of the standout talks, key insights, and unforgettable moments that got things rolling at SRECon EMEA Dublin 2024!

Hopefully, you’ve caught our updates from Day 1 at SRECon. If you missed them, you can still check out the highlights in our Day 1 recap. Now, let’s jump into what stood out on Day 2 of SRECon 2024!

Highlights from Day 2

Here's a quick recap of the sessions that sparked conversations on Day 2:

Treat Your Code as a Crime Scene

The session started with a quick and engaging look at offender profiling, then explored how those ideas can be applied to software development. We saw how version-control data, which often just sits there unused, can reveal interesting behaviors and patterns within a development team.

Finding the Capacity to Grieve Once More

Alexandros shared fascinating stories from Wikipedia's experience with unexpected traffic spikes, particularly during significant events like notable deaths, which can sometimes cause serious outages. He talked about how they thought they had tackled these challenges, only to face a major outage in 2020 caused by a tragic loss and a DDoS attack.

Anomaly Detection in Time Series from Scratch Using Statistical Analysis

Ivan Shubin shared his insights on the tricky problem of anomaly detection in time series data. He made a compelling case that you don't need AI or machine learning to get good results, and showed how basic statistical methods can do the job effectively.

SRE in Small Orgs

During this session, Emil and Joan invited everyone to a casual conversation about the ins and outs of running SRE teams in smaller organizations. It was all about connecting with others in similar situations and bouncing ideas around together.

Monitoring and Alerting

Daria and Niall had a laid-back conversation with attendees about monitoring and alerting, followed by a fun Q&A session. It was really interesting to hear how SREs think about monitoring and alerting!

Synthetic Monitoring and E2E Testing: 2 Sides of the Same Coin

Carly delivered an insightful session on the relationship between synthetic monitoring and E2E testing. She addressed the cultural and tooling challenges that keep development and SRE teams in silos, even in a DevOps environment.

How Snowflake Migrated All Alerts and Dashboards to a Prometheus-Based Metrics System in 3 Months

In his talk, Carlos Mendizabal took the audience through Snowflake's journey of migrating all alerts and dashboards to a Prometheus-based metrics system in just three months. He shared the ups and downs of rewriting every single alert and dashboard for system monitoring.

If you missed out on our amazing merch yesterday, track us down and grab yours! 😎

I am already looking forward to Day 3 of SRECon Dublin 2024.

SRECon EMEA 2024 - Day 1 | Last9

Here’s a quick rundown of the standout talks, big ideas, and memorable moments that kicked things off at SRECon EMEA Dublin 2024!

If you’re an SRE, or know and love one, you probably already know that SRECon is the annual meetup for site reliability engineers.

So, What’s SRECon All About?

Hosted by USENIX, SRECon brings together everyone from newbies to industry legends, all eager to talk about what works, what fails spectacularly, and how we can keep pushing for more reliable, scalable tech. It’s a community-driven, solutions-oriented conference for anyone looking to up their reliability game.

SRECon Dublin 2024

New for 2024: The Discussion Track

This year, SRECon introduced a fresh concept: the Discussion Track. It’s a space where attendees can go beyond presentations and have interactive discussions, led by experienced hosts who shape each session into whatever the group needs: an AMA, casual brainstorming, or an unconference vibe.

Highlights from Day 1

Here are some of the talks I enjoyed at SRECon Dublin 2024:

Dude, You Forgot the Feedback: How Your Open Loop Control Planes Are Causing Outages

The title alone brought people in. This session highlighted the risks of "fire and forget" control planes that lack real-time feedback, which can lead to outages. Laura walked through ways to design control planes that actively report on their actions and impacts, making systems more reliable and reducing operational errors.

SRE Saga: The Song of Heroes and Villains

This talk shared great practical examples to help SRE teams build resilience and work better together when facing challenges. It also explored fun ways to tap into that "superhero" energy within the team, encouraging talent development while keeping everyone on the same page and accountable.

The Frontiers of Reliability Engineering

The discussion focused on three key frontiers the team actively invested in: Data Operations and Monitoring Event-Based Systems, Mobile Observability, and Effective Management Practices for Reliability. Heinrich broke down how reaching top reliability means having many active feedback loops, and he even shared a handy diagram showing how it’s done.

I Can OIDC You Clearly Now: How We Made Static Credentials a Thing of the Past

The team tackled the challenge of managing secrets in an open-source CI/CD pipeline by moving from static secrets to OIDC-based access, improving security and empowering engineers.

Rock around the Clock (Synchronization): Improve Performance with High Precision Time!

Lerna Ekmekcioglu from Clockwork Systems discussed the crucial role of clock synchronization in addressing latency issues in distributed systems. She explained how tough it can be to pinpoint slowdowns, especially in complex environments like on-premises and cloud setups. The talk demonstrated how network contention affects tail latencies and shared insights on various clock synchronization protocols, their pros and cons, and best practices for managing clock discipline. It was definitely one of the most interesting talks of the day!

Managing Cost

This session brought everyone together to discuss cost management, facilitated by knowledgeable guides. Rather than a prepared talk, it was an informal gathering for questions and conversation among everyone interested in managing costs.

Sailing the Database Seas: Applying SRE Principles at Scale

The speaker shared valuable insights and real-world examples on topics like Monitoring Distributed Systems, Eliminating Toil, and Postmortem Culture. We walked away with practical ideas and guidelines to help us better understand and operate our database systems, including tips on selecting the right SLIs and SLOs.

Selective Reliability Engineering: There Is No Single Source of Truth

The speakers looked at some common sources of confusion in system design and data modeling, while also considering bigger questions about truth, the sources they trusted, and why those uncertainties really mattered.

Panel Discussion: Is Reliability a Luxury Good?

One of the highlights of the event was the panel discussion "Is Reliability a Luxury Good?", featuring industry experts Andrew Ellam, Niall Murphy from Stanza, Joan O'Callaghan from Udemy, and Avleen Vig. Their diverse perspectives sparked thought-provoking discussion on the importance of building reliable systems and the trade-offs companies must consider when investing in reliability.

Service Level Objectives

This session offered an open space for attendees to discuss SLOs with a few experts. It wasn’t a structured talk or workshop but a relaxed, interactive discussion where people could ask questions and connect with others interested in SLOs.

Our team’s got some awesome merch with them, so don’t miss out: track us down and grab yours! 😎

Last9 Booth at SRECon Dublin 2024

I am already looking forward to Day 2 of SRECon Dublin 2024.

Scaling Prometheus: Tips, Tricks, and Proven Strategies | Last9

Learn how to scale Prometheus with practical tips and strategies to keep your monitoring smooth and efficient, even as your needs grow!

If you’re here, it’s safe to say your monitoring setup is facing some growing pains. Scaling Prometheus isn’t exactly plug-and-play, especially if your Kubernetes clusters or microservices are multiplying like bunnies. The more your infrastructure expands, the more you need a monitoring solution that can keep up without buckling under the pressure.

In this guide, we’ll talk about the whys and the hows of scaling Prometheus. We'll dig into the underlying concepts that make scaling Prometheus possible, plus the nuts-and-bolts strategies that make it work in the real world. Ready to level up your monitoring game?

Understanding Prometheus Architecture

Before we jump into scaling Prometheus, let’s take a peek under the hood to see what makes it tick.

Core Components

Time Series Database (TSDB)

Data Storage: Prometheus’s TSDB isn’t your typical database; it’s designed specifically for time-series data and stores metrics in a custom format optimized for quick access.

Crash Recovery: It uses a Write-Ahead Log (WAL) as a safety net, so recent data can be recovered after an unexpected crash.

Data Blocks: Instead of lumping all data together, the TSDB organizes samples into blocks that each cover 2 hours (older blocks are later compacted into larger ones). This keeps querying and processing efficient, even as your data volume grows.

Scraper

Metric Collection: The scraper is Prometheus’s eyes and ears, continuously pulling metrics from predefined endpoints.

Service Discovery: It supports automatic service discovery, so Prometheus can find new services without constant manual reconfiguration.

Scrape Configurations: The scraper also lets you define scrape intervals and timeouts, tailoring how often data is collected to your system’s needs.

PromQL Engine

Query Processing: The PromQL engine processes all your queries, making sense of the data stored in the TSDB.

Aggregations & Transformations: It’s built for powerful aggregations and transformations, so you can slice and dice metrics in almost any way you need.

Time-Based Operations: PromQL’s time-based capabilities let you compare metrics across different periods, a must-have for spotting trends or anomalies.

💡 If you're looking to set up and configure Alertmanager, we’ve got a handy guide that walks you through the process. Check it out!

The Pull Model Explained

Prometheus uses a pull model: it actively scrapes metrics from your endpoints rather than waiting for them to be pushed. This makes it well suited to controlled, precise monitoring.
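To make the pull model concrete, here is a minimal Python sketch of one scrape cycle. It is an illustration, not Prometheus's actual implementation. It assumes a simplified text exposition format (one `series value [timestamp]` entry per line, with no spaces inside label values). The HTTP fetch is passed in as a parameter so the sketch can run without a live endpoint. `parse_exposition`, `http_fetch`, and `scrape_targets` are hypothetical helpers. The one real behavior it mirrors is that Prometheus records an `up` sample for every target: 1 when the scrape succeeds, 0 when it fails.

```python
import urllib.request
from typing import Callable, Dict, List

Samples = Dict[str, float]


def parse_exposition(body: str) -> Samples:
    """Parse a simplified Prometheus text exposition payload.

    Assumption: every sample line is `series value [timestamp]` and label
    values contain no spaces. `# HELP` / `# TYPE` comment lines are skipped.
    """
    samples: Samples = {}
    for line in body.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        series, value = line.split()[:2]  # ValueError if the value is missing
        samples[series] = float(value)
    return samples


def http_fetch(target: str, timeout_s: float) -> str:
    """Hypothetical helper: GET http://<target>/metrics with a timeout."""
    url = f"http://{target}/metrics"
    with urllib.request.urlopen(url, timeout=timeout_s) as resp:
        return resp.read().decode("utf-8")


def scrape_targets(targets: List[str],
                   fetch: Callable[[str, float], str] = http_fetch,
                   timeout_s: float = 10.0) -> Dict[str, Samples]:
    """One pull cycle: the server initiates a request to every target.

    A failed, timed-out, or unparsable scrape is recorded as up=0 instead of
    simply producing no data, which is how the pull model exposes dead targets.
    """
    results: Dict[str, Samples] = {}
    for target in targets:
        try:
            samples = parse_exposition(fetch(target, timeout_s))
            samples["up"] = 1.0
        except Exception:
            samples = {"up": 0.0}
        results[target] = samples
    return results


if __name__ == "__main__":
    # A fake fetcher stands in for real HTTP so the example runs offline.
    def fake_fetch(target: str, timeout_s: float) -> str:
        if target == "localhost:9100":
            return "# TYPE node_load1 gauge\nnode_load1 0.42\n"
        raise TimeoutError(f"{target} did not answer within {timeout_s}s")

    print(scrape_targets(["localhost:9100", "localhost:9999"], fake_fetch))
    # {'localhost:9100': {'node_load1': 0.42, 'up': 1.0}, 'localhost:9999': {'up': 0.0}}
```

Because the server decides when to call each target, a silent or broken target shows up as an explicit `up` value of 0. A push-based collector would instead see a quiet gap in incoming data.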
Here’s an example configuration:

```yaml
scrape_configs:
  - job_name: 'node'
    static_configs:
      - targets: ['localhost:9100']
    scrape_interval: 15s
    scrape_timeout: 10s
    metrics_path: /metrics
    scheme: http
```

Benefits of the Pull Model:

Control Over Failure Detection: Prometheus knows when a target fails to respond (the target’s `up` metric drops to 0), giving you insight into the health of your endpoints.

Firewall Friendliness: It’s generally easier to allow one-way traffic for scrapes than to configure permissions for every component.

Simple Testing: You can verify endpoint availability and scrape configurations without a lot of troubleshooting.

Scaling Strategies

When Prometheus starts to feel the weight of growing data and queries, it’s time to explore scaling. Here are two foundational strategies, with more advanced options after them.

1. Vertical Scaling

The simplest approach is to beef up your existing Prometheus instance with more memory, CPU, and storage, and then tune its settings to match. Scrape and rule-evaluation intervals, along with exemplar storage limits, live in prometheus.yml:

```yaml
global:
  scrape_interval: 15s
  evaluation_interval: 15s

storage:
  exemplars:
    # Only takes effect when exemplar storage is enabled (--enable-feature=exemplar-storage)
    max_exemplars: 100000
```

Retention, WAL compression, and query limits are set with command-line flags when starting Prometheus:

```shell
prometheus \
  --config.file=prometheus.yml \
  --storage.tsdb.retention.time=15d \
  --storage.tsdb.retention.size=512GB \
  --storage.tsdb.wal-compression \
  --query.max-samples=50000000 \
  --query.timeout=2m \
  --enable-feature=exemplar-storage
```

Key Considerations:

Monitor TSDB Compaction: Regularly check TSDB compaction metrics, since compaction is essential for storage efficiency.

Watch WAL Performance: Keep an eye on WAL metrics to ensure smooth crash recovery.

Track Memory Usage: Memory demands grow with data volume; tracking them helps you avoid resource issues.

📝 Check out our guide on Prometheus RemoteWrite Exporter to get all the details you need!

2. Horizontal Scaling Through Federation

Federation allows you to create a multi-tiered Prometheus setup, which is a great way to scale while keeping monitoring organized.
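Under the hood, federation is an ordinary scrape. The global server requests the `/federate` endpoint of each lower-level server and passes one `match[]` query parameter per series selector. The Python sketch below (standard library only; `federate_url` and `apply_target_labels` are hypothetical helper names) does two things. It builds that URL, and it models, in simplified form, what `honor_labels` changes. With `honor_labels` enabled, labels already on a federated series (such as its original `job`) are kept. Without it, a clashing label is preserved as `exported_<name>` and overwritten by the global server's own target label.

```python
from typing import Dict, List
from urllib.parse import parse_qs, urlencode, urlsplit

Labels = Dict[str, str]


def federate_url(host: str, selectors: List[str], scheme: str = "http") -> str:
    """Build the URL a global Prometheus scrapes on a lower-level server.

    Each series selector becomes its own repeated `match[]` parameter.
    """
    query = urlencode({"match[]": selectors}, doseq=True)
    return f"{scheme}://{host}/federate?{query}"


def apply_target_labels(scraped: Labels, target: Labels,
                        honor_labels: bool) -> Labels:
    """Simplified model of label-conflict handling during a scrape.

    honor_labels=True: labels already present on the scraped series win.
    honor_labels=False: a clashing scraped label is kept as exported_<name>
    and the target's own value is written over it.
    (Edge cases, such as a pre-existing exported_<name> label, are ignored.)
    """
    merged = dict(scraped)
    for name, value in target.items():
        if honor_labels:
            merged.setdefault(name, value)
        else:
            if name in merged:
                merged[f"exported_{name}"] = merged[name]
            merged[name] = value
    return merged


if __name__ == "__main__":
    url = federate_url("prometheus-app:9090", ['{job="node"}', '{__name__=~"job:.*"}'])
    print(url)
    # Decoding the query string recovers the original selectors.
    print(parse_qs(urlsplit(url).query)["match[]"])
    # ['{job="node"}', '{__name__=~"job:.*"}']

    series = {"__name__": "node_load1", "job": "node", "instance": "host-1:9100"}
    target = {"job": "federate", "instance": "prometheus-app:9090"}
    print(apply_target_labels(series, target, honor_labels=True)["job"])   # node
    print(apply_target_labels(series, target, honor_labels=False)["job"])  # federate
```

This is why federation setups usually enable `honor_labels`. Without it, every federated series would be relabeled as coming from the federation job, and the original `job` and `instance` values would survive only under `exported_*` names.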
Here’s a basic configuration for the global Prometheus server:

```yaml
# Global Prometheus configuration (prometheus.yml)
scrape_configs:
  - job_name: 'federate'
    scrape_interval: 15s
    honor_labels: true
    metrics_path: '/federate'
    params:
      'match[]':
        - '{job="node"}'
        - '{job="kubernetes-pods"}'
        - '{__name__=~"job:.*"}'
    static_configs:
      - targets:
          - 'prometheus-app:9090'
          - 'prometheus-infra:9090'
```

The `job:` recording rules it federates are defined on each lower-level server, in a rule file loaded through `rule_files` and grouped under a named group:

```yaml
# Recording rules for federation (rule file on each lower-level Prometheus)
groups:
  - name: federation
    rules:
      # node_exporter memory metrics, averaged per job so the job: prefix holds
      - record: job:node_memory_utilization:avg
        expr: avg by (job) (1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes)
```

Advanced Scaling Solutions

When Prometheus alone isn’t enough, tools like Thanos and Cortex can extend its capabilities for long-term storage and high-demand environments.

Thanos Architecture and Implementation

Thanos adds long-term storage and global querying. Here’s a basic setup for the Thanos Query component:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: thanos-query
spec:
  replicas: 3
  # apps/v1 Deployments require a selector that matches the pod template labels
  selector:
    matchLabels:
      app: thanos-query
  template:
    metadata:
      labels:
        app: thanos-query
    spec:
      containers:
        - name: thanos-query
          image: quay.io/thanos/thanos:v0.24.0
          args:
            - 'query'
            - '--store=dnssrv+_grpc._tcp.thanos-store'
            - '--store=dnssrv+_grpc._tcp.thanos-sidecar'
```

Cortex for Cloud-Native Deployments

If you’re in a cloud-native environment, Cortex offers the following benefits:

Dynamic Scaling: Its components scale horizontally alongside your infrastructure.

Multi-Tenant Isolation: Cortex is built for multi-tenancy, keeping each tenant isolated.

Cloud Storage Integration: Cortex connects to cloud storage for long-term retention.

Query Caching: It offers query caching to improve performance under heavy load.

📖 Check out our guide on Prometheus Recording Rules; it's a great resource if you're working with Prometheus and looking to optimize your setup!

Practical Performance Optimization

To keep Prometheus running smoothly, here are some optimization tips.

Query Optimization

Avoid complex or redundant PromQL queries that could slow down Prometheus. For example:

Before:

```promql
rate(http_requests_total[5m]) or rate(http_requests_total[5m] offset 5m)
```

After:

```promql
rate(http_requests_total[5m])
```
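It helps to recall what the optimized query computes. `rate()` takes the counter samples inside the range window, adds up the increases (treating any drop in value as a counter reset), and divides by the time covered. The Python sketch below illustrates that idea under simplifying assumptions. `simple_rate` is a hypothetical helper, and unlike PromQL it does not extrapolate to the edges of the window, so its results will be close to, but not identical to, what Prometheus returns.

```python
from typing import List, Tuple

# (unix timestamp in seconds, counter value), oldest first
Sample = Tuple[float, float]


def simple_rate(samples: List[Sample]) -> float:
    """Average per-second increase of a counter across the given samples.

    A value lower than its predecessor is treated as a counter reset, so the
    new value itself counts as the increase since the restart. No
    extrapolation to the range boundaries is performed (PromQL does that).
    """
    if len(samples) < 2:
        raise ValueError("rate needs at least two samples")
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        increase += cur - prev if cur >= prev else cur
    elapsed = samples[-1][0] - samples[0][0]
    if elapsed <= 0:
        raise ValueError("samples must span a positive time range")
    return increase / elapsed


if __name__ == "__main__":
    # Five scrapes 15s apart; the counter resets between t=30 and t=45.
    window = [(0, 100), (15, 130), (30, 160), (45, 30), (60, 60)]
    print(simple_rate(window))  # 2.0 (120 counted increments over 60 seconds)
```

In this example, a naive last-minus-first calculation would report a negative rate, (60 - 100) / 60. That is why `rate()` should only be applied to counters and why reset handling is built into it.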
Recording RulesFor frequently-used, heavy queries, recording rules can lighten the load:groups: - name: example rules: - record: job:http_inprogress_requests:sum expr: sum(http_inprogress_requests) by (job)3. Label ManagementAvoid high-cardinality labels, as they can create performance issues.Good Label Usage:metric_name{service="payment", endpoint="/api/v1/pay"}Probo Cuts Monitoring Costs by 90% with Last9 | Last9Read how Probo uses Last9 as an alternative to New Relic and Cloudwatch for infrastructure monitoring.Download PDFMonitoring Your Prometheus InstanceKeeping Prometheus itself healthy requires monitoring key metrics:TSDB Metrics:rate(prometheus_tsdb_head_samples_appended_total[5m]) prometheus_tsdb_head_seriesScrape Performance:To monitor the performance of your scrape targets, use the following query to track the rate of scrapes that exceeded the sample limit:rate(prometheus_target_scrapes_exceeded_sample_limit_total[5m]) prometheus_target_scrape_pool_targetsQuery Performance:To evaluate the performance of your queries, this query measures the rate of query execution duration:rate(prometheus_engine_query_duration_seconds_count[5m])Troubleshooting GuideScaling can introduce new challenges, so here are some common issues and quick solutions to keep Prometheus running smoothly:High Memory UsageHigh memory consumption often points to high-cardinality metrics or inefficient queries. Here are some steps to diagnose and mitigate:# Check series cardinalitycurl -G http://localhost:9090/api/v1/status/tsdb # Monitor memory usage in real-timecontainer_memory_usage_bytes{container="prometheus"}Tip: Keep an eye on your metrics’ labels and reduce unnecessary ones. 
High-cardinality labels can quickly inflate memory use.

Slow Queries

If queries are slowing down, it’s time to check what’s running under the hood. Prometheus can write every query it executes to a log file, configured with query_log_file in the global section of prometheus.yml:

```
# prometheus.yml: log every executed query to a file (example path)
global:
  query_log_file: /prometheus/query.log

# Monitor query performance to spot bottlenecks
rate(prometheus_engine_query_duration_seconds_sum[5m])
```

Tip: Implement recording rules to pre-compute frequently accessed metrics, reducing load on Prometheus when running complex queries.

Conclusion

Scaling Prometheus isn’t just about adding more power; it’s about understanding when and how to grow to fit your needs. With the right strategies, you’ll keep Prometheus performing well, no matter how your infrastructure grows.

🤝 If you’re keen to chat or have any questions, feel free to join our Discord community! We have a dedicated channel where you can connect with other developers and discuss your specific use cases.

FAQs

Can you scale Prometheus?

Yes! Prometheus can be scaled both vertically (by increasing resources on a single instance) and horizontally (through federation, or with solutions like Thanos or Cortex for distributed setups).

How well does Prometheus scale?

Prometheus scales effectively for most use cases, especially when combined with federation for hierarchical setups or with long-term storage solutions like Thanos. However, a single Prometheus server works best for monitoring individual services and clusters. It is not designed to be a one-size-fits-all centralized store.

What is Federated Prometheus?

Federated Prometheus refers to a setup where multiple Prometheus servers work in a hierarchical structure.
Each “child” instance gathers data from a specific part of your infrastructure, and a “parent” Prometheus instance collects summaries from them. This makes large, distributed environments easier to manage.

Is Prometheus pull or push?

Prometheus operates on a pull-based model. It scrapes (pulls) metrics from endpoints at regular intervals rather than having metrics pushed to it.

How can you orchestrate Prometheus?

On Kubernetes, the Prometheus Operator simplifies the deployment, configuration, and management of Prometheus and related services. It provides custom resources such as Prometheus, ServiceMonitor, and PrometheusRule that describe your monitoring setup declaratively.

What is the default Prometheus configuration?

By default, Prometheus keeps time-series data for 15 days and stores it on local disk. Its global scrape interval defaults to 1 minute, although the example prometheus.yml shipped with Prometheus sets it to 15 seconds. All of these settings can be customized.

What is the difference between Prometheus and Graphite?

Prometheus and Graphite both handle time-series data but have different design philosophies. Prometheus uses a pull model, has its own query language (PromQL), and supports alerting natively, with Alertmanager handling notifications. Graphite uses a push model, offers query functions through its render API, and relies on external tools for alerting.

How does Prometheus compare to Ganglia?

Prometheus is more modern and flexible than Ganglia, especially in dynamic, containerized environments. It offers better support for cloud-native systems, more powerful query capabilities, and better integration with Kubernetes.

What is the best way to integrate Prometheus with your organization's existing monitoring system?

Integrate Prometheus with existing systems using exporters, Alertmanager for notifications, and tools like Grafana for visualization. You can also use federation or Thanos to bridge Prometheus data with other systems.

What are the benefits of Federated Prometheus?

Federated Prometheus offers scalable monitoring for large, distributed environments. It enables targeted scraping across multiple Prometheus instances, reduces data redundancy, and optimizes resource usage by dividing and conquering.
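Some numbers show why the label-management and high-memory advice in this post matters. Every unique combination of label values becomes its own series. The worst-case series count for one metric is therefore the product of the distinct values each label can take.

The Python sketch below does that arithmetic. The label names and value counts are hypothetical. Real series counts are usually lower, because not every combination actually occurs.

```python
from math import prod


def worst_case_series(label_cardinalities: dict[str, int]) -> int:
    """Upper bound on the series count of one metric.

    Each unique combination of label values is a separate series, so the bound
    is the product of the number of distinct values per label (1 if no labels).
    """
    return prod(label_cardinalities.values())


# Hypothetical bounded labels, similar to the service/endpoint example above.
bounded = {"service": 20, "endpoint": 50, "status_code": 5}

# The same metric after adding an unbounded label such as a user ID.
with_user_id = {**bounded, "user_id": 10_000}

print(worst_case_series(bounded))       # 5000
print(worst_case_series(with_user_id))  # 50000000
```

A single unbounded label turns 5,000 potential series into 50 million. Cardinality therefore deserves attention before it shows up as memory pressure.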


Getting Started with Host Metrics Using OpenTelemetry | Last9

Learn to monitor host metrics with OpenTelemetry. Discover setup tips, common pitfalls, and best practices for effective observability.

After years of working with monitoring solutions at both startups and big-name companies, I’ve realized something important: knowing why you’re doing something is just as crucial as knowing how to do it. Host metrics monitoring isn’t just about gathering data. It’s about figuring out what that data means and why it matters to you.

In this guide, I want to help you make sense of host metrics monitoring with the OpenTelemetry Collector. We’ll explore how these metrics can give you valuable insights into your systems and help you keep everything running smoothly.

What are Host Metrics?

Host metrics are the core performance indicators of a server. They show how resources are being used and how the system is behaving, which is the foundation of effective infrastructure management.

Key host metrics include:

- CPU Utilization: The percentage of CPU capacity being used.
- Memory Usage: The amount of RAM consumed by the system.
- Disk and Filesystem: Disk I/O operations and filesystem space availability.
- Network Interface: Data transfer rates and network connectivity.
- Process CPU: CPU usage of individual system processes.

These metrics provide critical insights into the performance and operational status of a host.

The OpenTelemetry Collector Architecture

The OpenTelemetry Collector uses a pipeline architecture to collect, process, and export telemetry data efficiently.

Key Components

- Receivers: These are the entry points for data collection, responsible for gathering metrics, logs, and traces from various sources. For host metrics, we use the hostmetrics receiver.
- Processors: These components handle data transformation, enrichment, or aggregation.
They can apply modifications such as batching, filtering, or adding metadata before forwarding the data to the exporters.
- Exporters: These send the processed telemetry data to a destination, such as Prometheus, an OTLP (OpenTelemetry Protocol) endpoint, or other monitoring and observability platforms.

The data flow through the pipeline looks like this:

[Figure: OTel Collector Architecture]

Implementing OpenTelemetry in Your Environment

1. Basic Setup (Development Environment)

To start with a simple local setup, here’s a minimal configuration that I use for development. It uses the hostmetrics receiver to collect basic CPU and memory metrics and exposes them on a Prometheus scrape endpoint.

```yaml
# config.yaml - Development Setup
receivers:
  hostmetrics:
    collection_interval: 30s
    scrapers:
      cpu: {}
      memory: {}

processors:
  resource:
    attributes:
      - action: insert
        key: service.name
        value: "host-monitor-dev"
      - action: insert
        key: env
        value: "development"

exporters:
  prometheus:
    endpoint: "0.0.0.0:8889"

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      processors: [resource]
      exporters: [prometheus]
```

Start the OpenTelemetry Collector with debug logging to confirm that everything works:

```shell
# Start the collector with debug logging
otelcol-contrib --config config.yaml --set=service.telemetry.logs.level=debug
```

Once it is running, the metrics are available for scraping at http://localhost:8889/metrics.

2. Production Configuration

For production, a more robust setup is recommended, incorporating multiple exporters and enhanced metadata for better observability.
This example adds more detailed metrics and reads environment-specific values from environment variables.

```yaml
# config.yaml - Production Setup
receivers:
  hostmetrics:
    collection_interval: 10s
    scrapers:
      cpu:
        metrics:
          system.cpu.utilization:
            enabled: true
      memory:
        metrics:
          system.memory.utilization:
            enabled: true
      disk: {}
      filesystem: {}
      network:
        metrics:
          system.network.io:
            enabled: true

processors:
  resource:
    attributes:
      - action: insert
        key: service.name
        value: ${SERVICE_NAME}
      - action: insert
        key: environment
        value: ${ENV}
  resourcedetection:
    detectors: [env, system, gcp, azure]
    timeout: 2s

exporters:
  otlp:
    endpoint: ${OTLP_ENDPOINT}
    tls:
      insecure: ${OTLP_INSECURE}
  prometheus:
    endpoint: "localhost:8889"

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      processors: [resource, resourcedetection]
      exporters: [otlp, prometheus]
```

This production configuration does three things:

- It collects metrics every 10 seconds.
- It turns on the optional utilization metrics.
- It sends the same data to a local Prometheus endpoint and to an OTLP-compatible backend, which is useful for integrating with larger observability platforms.

3. Kubernetes Deployment

For Kubernetes environments, deploying the OpenTelemetry Collector as a DaemonSet ensures that metrics are gathered from every node in the cluster.
Below is a configuration for deploying the collector on Kubernetes.

ConfigMap for Collector Configuration

The ConfigMap contains the collector configuration, defining how the metrics are scraped and where they are exported.

```yaml
# kubernetes/collector-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-collector-config
data:
  config.yaml: |
    receivers:
      hostmetrics:
        # Read node (not container) stats from the host filesystem mounted at /hostfs
        root_path: /hostfs
        collection_interval: 30s
        scrapers:
          cpu: {}
          memory: {}
          disk: {}
          filesystem: {}
          network: {}
          process: {}
    processors:
      resource:
        attributes:
          - action: insert
            key: cluster.name
            value: ${CLUSTER_NAME}
      resourcedetection:
        # "env" reads OTEL_RESOURCE_ATTRIBUTES, "system" adds host details
        detectors: [env, system]
        timeout: 5s
    exporters:
      otlp:
        endpoint: ${OTLP_ENDPOINT}
        tls:
          insecure: false
          # Client certificate for mTLS; mount these files from a Secret
          cert_file: /etc/certs/collector.crt
          key_file: /etc/certs/collector.key
    service:
      pipelines:
        metrics:
          receivers: [hostmetrics]
          processors: [resource, resourcedetection]
          exporters: [otlp]
```

This ConfigMap defines the receivers, processors, and exporters needed to collect host metrics from each node and send them to an OTLP endpoint. Because the collector runs in a container, root_path points the hostmetrics receiver at the node’s filesystem so that it reports node-level statistics.

The two processors add metadata:

- The resource processor adds the cluster name.
- The resourcedetection processor adds host details through the system detector.
- It also adds the node name through the env detector, which reads the OTEL_RESOURCE_ATTRIBUTES variable set in the DaemonSet.

DaemonSet for Collector Deployment

The DaemonSet ensures that one instance of the collector runs on every node in the cluster.

```yaml
# kubernetes/daemonset.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: otel-collector
spec:
  selector:
    matchLabels:
      app: otel-collector
  template:
    metadata:
      labels:
        app: otel-collector
    spec:
      containers:
        - name: otel-collector
          image: otel/opentelemetry-collector-contrib:latest  # pin a specific version in production
          # Load the mounted file; the contrib image defaults to /etc/otelcol-contrib/config.yaml
          args: ["--config=/etc/otelcol/config.yaml"]
          resources:
            limits:
              cpu: 200m
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 100Mi
          volumeMounts:
            - name: config
              mountPath: /etc/otelcol/config.yaml
              subPath: config.yaml
            - name: hostfs
              mountPath: /hostfs
              readOnly: true
          env:
            - name: CLUSTER_NAME
              value: "my-cluster"  # placeholder: set your cluster's name here
            - name: K8S_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: OTEL_RESOURCE_ATTRIBUTES
              value: "k8s.node.name=$(K8S_NODE_NAME)"
            - name: OTLP_ENDPOINT
              value: "your-otlp-endpoint"
      volumes:
        - name: config
          configMap:
            name: otel-collector-config
        - name: hostfs
          hostPath:
            path: /
```

In this DaemonSet configuration:

- The otel-collector container is deployed on every node.
- The node’s root filesystem is mounted read-only at /hostfs for the hostmetrics receiver.
- CLUSTER_NAME is set as a literal value, because Kubernetes does not expose the cluster name through the downward API. The node name comes from spec.nodeName.
- Metrics are exported to the specified OTLP endpoint, making the setup compatible with cloud-based or self-hosted observability backends.

This setup collects host metrics from every node in a Kubernetes cluster, making it a good fit for large-scale environments.

Common Pitfalls and Solutions

When running the OpenTelemetry Collector in production, you might run into several common challenges. Here’s a look at these pitfalls along with solutions.

1. High CPU Usage in Production

Issue: If you notice high CPU utilization from the collector itself, a short collection interval may be generating excessive load.

Solution: Increase the collection interval so that data is collected less often, which lets the collector operate more efficiently.

```yaml
receivers:
  hostmetrics:
    collection_interval: 60s  # up from the 30s used above (the receiver's default is 1m)
```

2. Missing Permissions on Linux

Issue: When you run the OpenTelemetry Collector without root privileges, you might encounter permission errors, particularly when it tries to access disk metrics.

Solution: Grant the collector binary the capabilities it needs to access the required system resources.

```shell
# Add capabilities for disk metrics
sudo setcap cap_dac_read_search=+ep /usr/local/bin/otelcol-contrib
```

3. Memory Leaks in Long-Running Instances

Issue: Long-running collector instances can keep growing in memory, especially under heavy load or with many processors configured.

Solution: Add the memory_limiter processor to cap memory use. It refuses new data once the limit is reached. Also add a batch processor to control batch sizes. Place memory_limiter first in the pipeline.

```yaml
processors:
  memory_limiter:
    check_interval: 5s
    limit_mib: 150
  batch:
    timeout: 10s
    send_batch_size: 1024

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      processors: [memory_limiter, batch]  # memory_limiter goes first
      exporters: [otlp]
```

Best Practices from Production

When deploying the OpenTelemetry Collector, following these practices can significantly improve the efficiency and reliability of your monitoring setup. Here are some recommendations based on production experience:

1. Resource Attribution

Always use the resource detection processor to automatically enrich telemetry data with host and cloud metadata. This context makes it easier to understand the performance and health of your applications within the infrastructure.

```yaml
processors:
  resourcedetection:
    detectors: [env, system, gcp, azure]
    timeout: 2s
```

2. Monitoring the Monitor

Set up alerting on the collector’s own metrics, which it exposes in Prometheus format on port 8888 by default. By monitoring the health and performance of the collector itself, you can identify issues before they affect your overall observability stack.

3. Graceful Degradation

Configure timeout and retry policies for exporters so that transient issues don’t lead to data loss. The collector retries with exponential backoff, bounded by an initial interval, a maximum interval, and a maximum total retry time:

```yaml
exporters:
  otlp:
    endpoint: ${OTLP_ENDPOINT}
    tls:
      insecure: ${OTLP_INSECURE}
    timeout: 10s
    retry_on_failure:
      enabled: true
      initial_interval: 5s
      max_interval: 30s
      max_elapsed_time: 300s
```

4. Versioning and Upgrades

Regularly update the OpenTelemetry Collector to pick up new features, improvements, and bug fixes. Always test new versions in a staging environment before rolling them out to production to confirm compatibility with your existing setup.

5. Configuration Management

Keep your configuration files in a version-controlled repository. This makes it easier to track changes, roll back when necessary, and collaborate across teams.

Conclusion

Getting a handle on host metrics monitoring with OpenTelemetry is like having a roadmap for your system’s performance. With the right setup, you’ll not only collect valuable data but also gain insights that help you make smarter decisions about your infrastructure.

🤝 If you’re still eager to chat or have questions, our community on Discord is open. We’ve got a dedicated channel where you can discuss your specific use case with other developers.

FAQs

What does an OpenTelemetry Collector do?

The OpenTelemetry Collector is like a central hub for telemetry data.
It receives, processes, and exports logs, metrics, and traces from various sources. This makes it easier to monitor and analyze your systems without being tied to a specific vendor.

What are host metrics?

Host metrics are performance indicators that give you a glimpse into how your server is doing. They include CPU utilization, memory usage, disk space availability, network throughput, and the CPU usage of individual processes. These metrics are essential for keeping an eye on resource utilization and ensuring your infrastructure runs smoothly.

What is the difference between telemetry and OpenTelemetry?

Telemetry is the general term for collecting data from remote sources and sending it to a system for monitoring and analysis. OpenTelemetry, on the other hand, is a specific open-source framework of APIs, SDKs, and tools. It generates, collects, and exports telemetry data (logs, metrics, and traces) in a standardized way, giving developers the tools to instrument their applications effectively.

Is the OpenTelemetry Collector observable?

Absolutely! The OpenTelemetry Collector generates its own telemetry, such as metrics and logs. You can monitor this data to evaluate the collector’s performance and health, including resource usage, processing latency, and error rates.

What is the difference between OpenTelemetry Collector and Prometheus?

While both are important in observability, they play different roles. The OpenTelemetry Collector acts as a data pipeline, collecting, processing, and exporting telemetry data. Prometheus is a monitoring and alerting toolkit specifically designed for storing and querying time-series data.
The two work together in both directions:

- Prometheus can scrape metrics from the collector through its prometheus exporter.
- The collector can push metrics directly to Prometheus with the prometheusremotewrite exporter.

How do you configure the OpenTelemetry Collector to monitor host metrics?

To configure the OpenTelemetry Collector for host metrics monitoring, follow these steps:

1. Set up a hostmetrics receiver in the configuration file.
2. Specify the collection interval and the scrapers for the metrics you want to collect, such as cpu, memory, disk, and network.
3. Configure processors and exporters to handle the collected data and send it to your chosen monitoring platform.

How do you set up HostMetrics monitoring with the OpenTelemetry Collector?

Setting up host metrics monitoring is straightforward. Create a configuration file that includes the hostmetrics receiver, define the metrics you want to collect, and specify an exporter that sends the data to your monitoring solution (like Prometheus). Once your configuration is ready, start the collector with the file you created, for example `otelcol-contrib --config config.yaml`.
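Here is how the CPU utilization numbers in this guide are derived. The hostmetrics cpu scraper reports system.cpu.time, a cumulative count of seconds each CPU has spent in each state (user, system, idle, and so on). A utilization figure such as system.cpu.utilization is the share of elapsed CPU time spent in each state between two collections.

The Python sketch below does that arithmetic for a single CPU with made-up sample values. The function names are hypothetical, and this is not the receiver’s actual code.

```python
# Cumulative CPU seconds per state for one CPU; state names follow the
# hostmetrics "state" attribute, but the sample values are made up.
CpuTimes = dict[str, float]


def cpu_utilization(prev: CpuTimes, curr: CpuTimes) -> dict[str, float]:
    """Fraction of elapsed CPU time spent in each state between two samples."""
    deltas = {state: curr[state] - prev.get(state, 0.0) for state in curr}
    total = sum(deltas.values())
    if total <= 0:
        raise ValueError("samples must be taken at different times")
    return {state: d / total for state, d in deltas.items()}


def busy_fraction(utilization: dict[str, float]) -> float:
    """Share of time the CPU was not idle."""
    return 1.0 - utilization.get("idle", 0.0)


# Two samples taken 10 seconds apart (the production collection_interval).
t0 = {"user": 100.0, "system": 50.0, "idle": 850.0}
t1 = {"user": 104.0, "system": 51.0, "idle": 855.0}

util = cpu_utilization(t0, t1)
print(util)                 # {'user': 0.4, 'system': 0.1, 'idle': 0.5}
print(busy_fraction(util))  # 0.5
```

Working from deltas of cumulative counters is also why a very short collection_interval adds overhead without adding much information. Each sample is only a difference between two readings.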

After years of working with monitoring solutions at both startups and big-name companies, I've realized something important: knowing why you’re doing something is just as crucial as knowing how to do it. When we talk about host metrics monitoring, it’s not just about gathering data; it’s about figuring out what that data means and why it’s important for you.In this guide, I want to help you make sense of host metrics monitoring with OpenTelemetry Collector. We'll explore how these metrics can give you valuable insights into your systems and help you keep everything running smoothly.What are Host Metrics?Host metrics represent essential performance indicators of a server’s operation. These metrics help monitor resource utilization and system behavior, allowing for effective infrastructure management.Key host metrics include:CPU Utilization: The percentage of CPU capacity being used.Memory Usage: The amount of RAM consumed by the system.Filesystem: Disk space availability and I/O operations.Network Interface: Data transfer rates and network connectivity.Process CPU: CPU usage of individual system processes.These metrics provide critical insights into the performance and operational status of a host.What are OpenTelemetry Metrics? A Comprehensive Guide | Last9Learn about OpenTelemetry Metrics, types of instruments, and best practices for effective application performance monitoring and observability.Last9Book a DemoThe OpenTelemetry Collector ArchitectureThe OpenTelemetry Collector operates on a pipeline architecture, designed to collect, process, and export telemetry data efficiently.Key ComponentsReceivers: These are the entry points for data collection, responsible for gathering metrics, logs, and traces from various sources. For host metrics, we use the host metrics receiver.Processors: These components handle data transformation, enrichment, or aggregation. 
They can apply modifications such as batching, filtering, or adding metadata before forwarding the data to the exporters.
- Exporters: These send the processed telemetry data to a destination, such as Prometheus, an OTLP (OpenTelemetry Protocol) endpoint, or other monitoring and observability platforms.

Data flows through the pipeline from receivers to processors to exporters:

[Figure: Otel Collector Architecture]

Implementing OpenTelemetry in Your Environment

1. Basic Setup (Development Environment)

To start with a simple local setup, here's a minimal configuration that I use for development. It uses the hostmetrics receiver to collect basic CPU and memory metrics and exports them through the Prometheus exporter.

```yaml
# config.yaml - Development Setup
receivers:
  hostmetrics:
    collection_interval: 30s
    scrapers:
      cpu: {}
      memory: {}

processors:
  resource:
    attributes:
      - action: insert
        key: service.name
        value: "host-monitor-dev"
      - action: insert
        key: env
        value: "development"

exporters:
  prometheus:
    # Prometheus can scrape the collector's metrics from this address
    endpoint: "0.0.0.0:8889"

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      processors: [resource]
      exporters: [prometheus]
```

Start the OpenTelemetry Collector with debug logging to confirm that everything works correctly:

```shell
# Start the collector with debug logging
otelcol-contrib --config config.yaml --set=service.telemetry.logs.level=debug
```

2. Production Configuration

For production, a more robust setup is recommended, with multiple exporters and richer metadata for better observability.
This example collects more detailed metrics and reads environment-specific values from environment variables.

```yaml
# config.yaml - Production Setup
receivers:
  hostmetrics:
    collection_interval: 10s
    scrapers:
      cpu:
        metrics:
          system.cpu.utilization:     # optional metric, disabled by default
            enabled: true
      memory:
        metrics:
          system.memory.utilization:  # optional metric, disabled by default
            enabled: true
      disk: {}
      filesystem: {}
      network:
        metrics:
          system.network.io:
            enabled: true

processors:
  resource:
    attributes:
      - action: insert
        key: service.name
        value: ${SERVICE_NAME}
      - action: insert
        key: environment
        value: ${ENV}
  resourcedetection:
    detectors: [env, system, gcp, azure]
    timeout: 2s

exporters:
  otlp:
    endpoint: ${OTLP_ENDPOINT}
    tls:
      insecure: ${OTLP_INSECURE}
  prometheus:
    endpoint: "localhost:8889"

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      processors: [resource, resourcedetection]
      exporters: [otlp, prometheus]
```

This production configuration collects metrics every 10 seconds and exports them both to Prometheus and to an OTLP-compatible endpoint, which is useful when integrating with larger observability platforms.

3. Kubernetes Deployment

For Kubernetes environments, deploying the OpenTelemetry Collector as a DaemonSet ensures that metrics are gathered from every node in the cluster.
Below is a configuration for deploying the collector on Kubernetes.

ConfigMap for Collector Configuration

The ConfigMap contains the collector configuration, defining how metrics are scraped and where they are exported.

```yaml
# kubernetes/collector-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-collector-config
data:
  config.yaml: |
    receivers:
      hostmetrics:
        collection_interval: 30s
        scrapers:
          cpu: {}
          memory: {}
          disk: {}
          filesystem: {}
          network: {}
          process: {}
    processors:
      resource:
        attributes:
          - action: insert
            key: cluster.name
            value: ${CLUSTER_NAME}
      resourcedetection:
        # "env" reads OTEL_RESOURCE_ATTRIBUTES; "system" adds host details such as host.name
        detectors: [env, system]
        timeout: 5s
    exporters:
      otlp:
        endpoint: ${OTLP_ENDPOINT}
        tls:
          insecure: false
          # Client certificate for mTLS; these files must be mounted into the pod
          cert_file: /etc/certs/collector.crt
          key_file: /etc/certs/collector.key
    service:
      pipelines:
        metrics:
          receivers: [hostmetrics]
          processors: [resource, resourcedetection]
          exporters: [otlp]
```

This ConfigMap defines the receivers, processors, and exporters needed to collect host metrics from each node and send them to an OTLP endpoint. The resource processor adds the cluster name as metadata, while the resourcedetection processor's env and system detectors attach host- and node-level metadata, including the node name passed in through OTEL_RESOURCE_ATTRIBUTES. The TLS settings reference client certificates under /etc/certs, so mount them into the pod (for example, from a Secret) or remove those two lines.

DaemonSet for Collector Deployment

The DaemonSet ensures that one instance of the collector runs on every node in the cluster.

```yaml
# kubernetes/daemonset.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: otel-collector
spec:
  selector:
    matchLabels:
      app: otel-collector
  template:
    metadata:
      labels:
        app: otel-collector
    spec:
      containers:
        - name: otel-collector
          image: otel/opentelemetry-collector-contrib:latest
          # Point the collector at the mounted config file explicitly
          args: ["--config=/etc/otelcol/config.yaml"]
          resources:
            limits:
              cpu: 200m
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 100Mi
          volumeMounts:
            - name: config
              mountPath: /etc/otelcol/config.yaml
              subPath: config.yaml
          env:
            # The downward API cannot expose the cluster name, so set it explicitly
            - name: CLUSTER_NAME
              value: "your-cluster-name"
            # Name of the node this pod is scheduled on
            - name: K8S_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # Read by the resourcedetection "env" detector
            - name: OTEL_RESOURCE_ATTRIBUTES
              value: "k8s.node.name=$(K8S_NODE_NAME)"
            - name: OTLP_ENDPOINT
              value: "your-otlp-endpoint"
      volumes:
        - name: config
          configMap:
            name: otel-collector-config
```

In this DaemonSet configuration:

- The otel-collector container runs on every node.
- CLUSTER_NAME is set explicitly, because the Kubernetes downward API does not expose the cluster name. The node name is injected from spec.nodeName and handed to the env detector through OTEL_RESOURCE_ATTRIBUTES.
- Metrics are exported to the specified OTLP endpoint, which works with cloud-based or self-hosted observability backends.

This setup collects host metrics from every node in a Kubernetes cluster and scales automatically as nodes are added, which makes it a good fit for large environments. Keep in mind that inside a container, the hostmetrics receiver sees the container's own filesystems and processes; to report the node's values, mount the host's root filesystem into the pod and point the receiver's root_path setting at it.

Common Pitfalls and Solutions

When running the OpenTelemetry Collector in production, you may run into several common challenges. Here are these pitfalls along with ways to address them.

1. High CPU Usage in Production

Issue: If the collector itself shows high CPU utilization, a short collection interval may be generating excessive load.

Solution: Increase the collection interval so data is collected less often, which lets the collector operate more efficiently.

```yaml
receivers:
  hostmetrics:
    collection_interval: 60s # Up from the 10s/30s intervals used earlier
```

2. Missing Permissions on Linux

Issue: When running the OpenTelemetry Collector without root privileges, you might see permission errors, particularly from the process scraper, which reads other users' entries under /proc.

Solution: Grant the collector binary the capabilities it needs to read the required system files.

```shell
# Allow the collector to read files and directories it would otherwise be denied
sudo setcap cap_dac_read_search=+ep /usr/local/bin/otelcol-contrib
```

3. Memory Leaks in Long-Running Instances

Issue: In some cases, long-running collector instances show steadily growing memory usage, especially when many processors are configured.

Solution: Add the memory_limiter processor to cap memory usage, and place it first in the pipeline so it can refuse data before the other processors handle it.

```yaml
processors:
  memory_limiter:
    check_interval: 5s
    limit_mib: 150   # keep below the container's 200Mi memory limit
  batch:
    timeout: 10s
    send_batch_size: 1024

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      # memory_limiter runs first, batch after it
      processors: [memory_limiter, batch]
      exporters: [otlp]
```

Best Practices from Production

When deploying the OpenTelemetry Collector, following a few best practices can significantly improve the efficiency and reliability of your monitoring setup. Here are some recommendations based on production experience:

1. Resource Attribution

Always use the resourcedetection processor to automatically detect and attach cloud and host metadata to your telemetry. This extra context makes it easier to understand the performance and health of your applications within the infrastructure.

```yaml
processors:
  resourcedetection:
    detectors: [env, system, gcp, azure]
    timeout: 2s
```

2. Monitoring the Monitor

Set up alerts on the collector's own metrics. By monitoring the health and performance of the collector itself, you can catch issues before they affect the rest of your observability stack.

3. Graceful Degradation

Configure timeouts and retry policies for exporters so that transient failures don't lead to data loss. With retries enabled, the exporter re-sends failed data with exponential backoff, so brief outages are handled gracefully.

```yaml
exporters:
  otlp:
    endpoint: ${OTLP_ENDPOINT}
    tls:
      insecure: ${OTLP_INSECURE}
    timeout: 10s
    retry_on_failure:
      enabled: true
      initial_interval: 5s    # wait before the first retry
      max_interval: 30s       # upper bound on the backoff between retries
      max_elapsed_time: 300s  # stop retrying a batch after this long
```

4. Versioning and Upgrades

Update the OpenTelemetry Collector regularly to pick up new features, improvements, and bug fixes. Always test new versions in a staging environment before rolling them out to production, and in Kubernetes pin a specific image tag rather than latest so upgrades happen only when you choose.

5. Configuration Management

Keep your configuration files in a version-controlled repository. This makes it easier to track changes, roll back when necessary, and collaborate across teams.

Conclusion

Getting a handle on host metrics monitoring with OpenTelemetry is like having a roadmap for your system's performance. With the right setup, you'll not only collect valuable data but also gain insights that help you make smarter decisions about your infrastructure.

🤝 If you're still eager to chat or have questions, our community on Discord is open. We've got a dedicated channel where you can discuss your specific use case with other developers.

FAQs

What does an OpenTelemetry Collector do?

The OpenTelemetry Collector is like a central hub for telemetry data.
It receives, processes, and exports logs, metrics, and traces from various sources, making it easier to monitor and analyze your systems without being tied to a specific vendor.

What are host metrics?

Host metrics are performance indicators that give you a glimpse into how your server is doing. They include things like CPU utilization, memory usage, disk space availability, network throughput, and the CPU usage of individual processes. These metrics are essential for keeping an eye on resource utilization and ensuring your infrastructure runs smoothly.

What is the difference between telemetry and OpenTelemetry?

Telemetry is the general term for collecting and sending data from remote sources to a system for monitoring and analysis. OpenTelemetry, on the other hand, is a specific framework, with APIs and SDKs, for generating, collecting, and exporting telemetry data (logs, metrics, and traces) in a standardized way. It gives developers the tools to instrument their applications effectively.

Is the OpenTelemetry Collector observable?

Absolutely! The OpenTelemetry Collector is itself observable. It generates its own telemetry, such as metrics and logs, that you can monitor to evaluate its performance and health, including resource usage, processing latency, and error rates.

What is the difference between OpenTelemetry Collector and Prometheus?

While both are important in observability, they play different roles. The OpenTelemetry Collector acts as a data pipeline, collecting, processing, and exporting telemetry data. Prometheus is a monitoring and alerting toolkit specifically designed for storing and querying time-series data.
You can scrape metrics from the OpenTelemetry Collector using Prometheus, and the collector can also export metrics directly to Prometheus.

How do you configure the OpenTelemetry Collector to monitor host metrics?

To configure the OpenTelemetry Collector for host metrics monitoring, set up a hostmetrics receiver in the configuration file. Specify the collection interval and the types of metrics you want to collect (like CPU, memory, disk, and network). Then, configure processors and exporters to handle the collected data and send it to your chosen monitoring platform.

How do you set up HostMetrics monitoring with the OpenTelemetry Collector?

Setting up HostMetrics monitoring is straightforward. Create a configuration file that includes the hostmetrics receiver, define the metrics you want to collect, and specify an exporter to send the data to your monitoring solution (like Prometheus). Once your configuration is ready, start the collector with the file you created.
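
Once the collector is running with the development configuration, a quick way to confirm that host metrics are flowing is to read the Prometheus exporter's output yourself. The Python sketch below is illustrative and not part of the setup described above. It assumes the exporter is reachable at `http://localhost:8889/metrics` (port 8889 from the development config, plus the exporter's `/metrics` path), and it assumes that hostmetrics names such as `system.cpu.time` appear with a `system_` prefix after translation to Prometheus naming. The helper names `metric_names`, `host_metric_names`, and `main` are hypothetical.

```python
# Hypothetical smoke test for the development setup: checks that the
# collector's Prometheus exporter is serving host metrics.
import sys
import urllib.request

# Assumption: port 8889 from the development config plus the /metrics path.
METRICS_URL = "http://localhost:8889/metrics"


def metric_names(exposition: str) -> set[str]:
    """Return the metric names found in Prometheus text-format output."""
    names: set[str] = set()
    for raw in exposition.splitlines():
        line = raw.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines.
        if not line or line.startswith("#"):
            continue
        # A sample line looks like: name{label="value",...} 12.5
        name = line.split("{", 1)[0].split(" ", 1)[0]
        names.add(name)
    return names


def host_metric_names(exposition: str, prefix: str = "system_") -> list[str]:
    """Return sorted metric names that look like hostmetrics output.

    Assumption: dotted names such as system.cpu.time are exposed with a
    "system_" prefix once translated to Prometheus naming.
    """
    return sorted(n for n in metric_names(exposition) if n.startswith(prefix))


def main(url: str = METRICS_URL) -> int:
    """Fetch the exporter output and print any host metric names found."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            body = resp.read().decode("utf-8")
    except OSError as err:  # URLError and socket timeouts are OSError subclasses
        print(f"could not reach {url}: {err}", file=sys.stderr)
        return 1
    found = host_metric_names(body)
    if not found:
        print("endpoint is up, but no system_* metrics were found", file=sys.stderr)
        return 1
    for name in found:
        print(name)
    return 0
```

Call `main()` after starting the collector: it prints the matching metric names and returns 0, or returns 1 if the endpoint is unreachable or exposes no `system_` metrics. Parsing the text format by hand keeps the sketch dependency-free; for anything beyond a smoke test, the parser module in the `prometheus_client` package is a sturdier choice.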

Prometheus RemoteWrite Exporter: A Comprehensive Guide | Last9

A comprehensive guide showing how to use PrometheusRemoteWriteExporter to send metrics from OpenTelemetry to Prometheus compatible backends